Manufacturing at the Edge

Przemek Tomczak, SVP, IoT & Utilities, KX

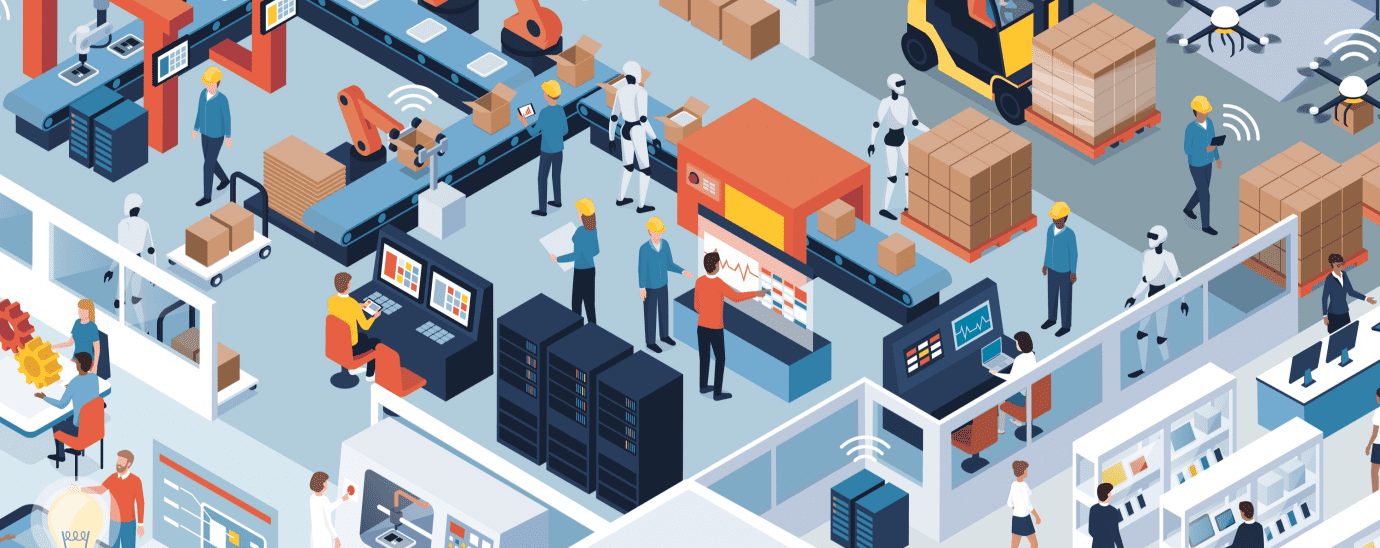

Companies are grappling with the enormous challenges that data and automation present in modern manufacturing.

Organisations know that the faster they can act to improve processes and lower costs, the more competitive they can be. The question is how to manage the ever-increasing volumes, variety and velocity of data being created, much of it from connected devices ‘at the edge’.

And the numbers are huge. A modern connected factory will create millions of data points a second from sensors in machinery. In fact, a recent report by Statista estimates data volumes from IoT devices alone will hit 79.4 zettabytes by 2025.

While edge computing – which the Industrial Internet Consortium defines as having the capability to conduct computing at the source of where it needs to take place – is undoubtedly revolutionising the industry, it’s also asking questions of legacy technologies and systems that are struggling to cope with the data deluge.

At the edge of the cloud

Certainly, the case for edge computing has never been stronger. In an industry as sensitive as manufacturing, even the smallest degree of latency can disrupt machine lines, reducing quality and output and costing millions in lost production time. Being able to act on insights from the data in the shortest possible time frame is therefore vital.

Cloud computing has also proved to be a transformational technology in manufacturing, providing significantly cheaper storage and processing capabilities while also giving rise to a vast marketplace of software and services that manufacturers can use to further enhance their processes and productivity.

However, concerns around security, reliability and latency remain. A data management architecture that combines edge computing with centralised data management, either on-premises or in the cloud, is therefore gaining prominence as a preferred approach in manufacturing. Together they bridge the worlds of big data and fast data, allowing manufacturers to manage the massive volumes of data being created while providing a means to bring historic and real-time data together for deeper levels of insight and understanding.

Automation driving adoption

That deeper understanding is critical to implementing what many see as the ultimate aim of edge computing: machine-to-machine communication and autonomous computing that achieve a state of self-awareness and ultimately create self-learning machines. The payoff is better control of manufacturing processes and increased uptime, yield and useful asset life.

Streaming analytics is the technology that enables this automation, allowing manufacturers to collect and analyse data in real time at the edge of their network as well as at the data centre, while comparing it to historical records and context.

This process also addresses the three critical needs of data management: the ability to ingest enormous amounts of data, the ability to analyse it in a low-latency environment, and the ability to use machine learning to link or correlate multiple new and historic data sets to generate valuable, actionable insight in real time.
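To make the idea concrete, here is a minimal Python sketch of an edge-side check that compares live readings with a historical baseline. It is an illustration only: the `EdgeMonitor` class, its parameters and the sample temperature values are hypothetical, and a simple z-score on a rolling mean stands in for the machine learning a production streaming analytics platform would apply.

```python
from collections import deque
from statistics import mean, stdev


class EdgeMonitor:
    """Hypothetical edge-side monitor (illustrative names throughout).

    Compares a rolling window of live readings against a baseline
    derived from historical data.
    """

    def __init__(self, historical, window=3, threshold=3.0):
        # Baseline from historical records (needs at least two varying values).
        self.base_mean = mean(historical)
        self.base_std = stdev(historical)
        self.window = deque(maxlen=window)  # keeps only the latest readings
        self.threshold = threshold          # allowed deviation, in std devs

    def ingest(self, reading):
        """Add one reading; return True if the rolling mean drifts from the
        historical mean by more than `threshold` standard deviations."""
        self.window.append(reading)
        if len(self.window) < self.window.maxlen:
            return False  # not enough live data yet
        z = abs(mean(self.window) - self.base_mean) / self.base_std
        return z > self.threshold


# Assumed sample data: historical and live sensor temperatures.
history = [20.0, 20.5, 19.5, 20.2, 19.8, 20.1, 19.9, 20.0]
monitor = EdgeMonitor(history, window=3)
flags = [monitor.ingest(x) for x in [20.1, 19.9, 20.0, 20.2, 25.0]]
print(flags)  # [False, False, False, False, True]
```

Because only a small window of recent readings is held in memory, the check can run on the device itself and raise a flag without a round trip to a central system. The historical baseline would typically be computed centrally and pushed out to the edge.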

Together, edge computing and streaming analytics software are enabling ‘microsecond’ decision-making that lets high-tech manufacturing organisations keep their equipment and product lines constantly monitored and running efficiently.

As we head towards Industry 4.0 and an ever-increasing number of connected devices within the manufacturing sphere, those that do not have the right data processes in place will be unable to continue improving productivity and efficiency through better use of analytics.