How better data management cuts costs and improves efficiency

In the current challenging economy, all businesses are trying to find ways to reduce costs and create efficiencies. IT teams are by no means immune, but not all IT costs are easy to reduce: some, like cloud storage, are seen as a necessary cost of business. Analyst firm Canalys expects worldwide cloud infrastructure services expenditure to have risen 28% year on year in the third calendar quarter of 2022, due to a range of factors including inflationary pressures and rising energy prices. Because of this, IT must look for immediate returns on investment – of both money and time. One overlooked way to achieve rapid ROI is a modern data management strategy.

Reduce data volumes

An organisation will often be paying too much for storage because it holds onto far too much data. Much of that volume is the same data, duplicated across employees and business units and sitting in silos. Classification and deduplication are often pushed down the action list because they are complex, and the annual cost of storage is often dismissed as a tax on running the business.

The truth is that much value can be derived from classifying and deduplicating data. To make the process as cost- and time-efficient as possible, organisations should classify the data first, then deduplicate only the data the company is required to retain.
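The classify-first, deduplicate-second ordering can be sketched in a few lines of Python. This is a minimal illustration, not a product workflow: the `classify` rule, the `RETAIN` and `DISPOSE` labels and the file names are all hypothetical, and a real classification scheme would come from the organisation's own retention policy. Duplicates are detected here by comparing SHA-256 hashes of file contents, which is one common approach among several.

```python
import hashlib
from collections import defaultdict

# Hypothetical classification labels, for illustration only.
RETAIN = "retain"
DISPOSE = "dispose"


def classify(name: str) -> str:
    """Toy rule (an assumption): temporary and backup files are not retained."""
    return DISPOSE if name.endswith((".tmp", ".bak")) else RETAIN


def find_duplicates(files: dict[str, bytes]) -> dict[str, list[str]]:
    """Group retained files by content hash and return groups with more than one file."""
    groups: dict[str, list[str]] = defaultdict(list)
    for name, content in files.items():
        # Classify first, so only data that must be kept is hashed and compared.
        if classify(name) == RETAIN:
            groups[hashlib.sha256(content).hexdigest()].append(name)
    return {digest: sorted(names) for digest, names in groups.items() if len(names) > 1}


if __name__ == "__main__":
    sample = {
        "finance/report.docx": b"Q3 figures",
        "sales/report-copy.docx": b"Q3 figures",
        "scratch/report.tmp": b"Q3 figures",
        "hr/policy.pdf": b"leave policy",
    }
    for names in find_duplicates(sample).values():
        print("Duplicate set:", names)
```

Because the temporary file is classified as disposable before any hashing happens, it never enters the comparison, which is the cost and time saving the ordering is meant to deliver.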

Switch off old hardware

Decluttering and streamlining how data is stored and managed can reduce the amount of physical storage required, and simplify how and where data is stored. When the whereabouts of data is fully understood, a “single source of truth” exists for every datapoint. In this situation it should be possible to begin removing legacy hardware from the IT environment. Fewer storage systems and servers are needed, and organisations move past the point of paralysis over what stays or goes because they now know what data is stored where.

Simplify and automate workloads

As a consequence of those first steps in deduplication, tasks which were once complex – such as classification, data aging, tiering (for lowering cost), operational efficiencies, basic hygiene and security patching – can be simplified, and it may be possible to automate workloads which previously needed manual intervention because of data complexity. All of these outcomes mean that the IT team's time can be freed up for other tasks, and that the parts of the business which use the streamlined systems get what they need faster – and perhaps even with greater assurance of accuracy too.

Streamline storage

Backups should be included in the quest to reduce data volume because modern, cloud-based backup systems can reduce costs significantly. There are also operational benefits, such as a range of search and restore options that aren’t available with legacy backup infrastructure. A report by Forrester found organisations achieved a 66% reduction in backup and data management costs with modern solutions versus legacy vendors.

Classification of data, mentioned earlier, has a key role to play in streamlining storage, improving cost efficiencies, supporting your sustainability agenda, and giving you predictable technology trends. If you classify your data according to your Relevant Record Strategy, you will be able to make a defensible decision about it. You will know what you need to keep, and where, allowing you to utilise primary, nearline, and offline solutions to maximise efficiency. More importantly though, you will only store that which you need to store, enabling defensible deletion decisions which will quickly control spiralling data growth.
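As a rough illustration of how classification can drive both tiering and defensible deletion, the Python sketch below maps a record to primary, nearline or offline storage, or marks it for deletion. Everything specific in it is an assumption made for the example: the record classes, the retention periods in `RETENTION_YEARS`, and the 90-day and 365-day idle thresholds are hypothetical and would be set by your own record strategy and legal advice.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical retention periods in years, keyed by record class.
RETENTION_YEARS = {"financial": 7, "operational": 2}


@dataclass(frozen=True)
class Record:
    name: str
    record_class: str
    created: date
    last_accessed: date


def storage_decision(record: Record, today: date) -> str:
    """Return 'delete', 'primary', 'nearline', 'offline' or 'review' for a record."""
    years = RETENTION_YEARS.get(record.record_class)
    if years is None:
        # Unclassified data cannot be defensibly deleted or tiered.
        return "review"
    if (today - record.created).days > years * 365:
        return "delete"  # past its retention period
    idle_days = (today - record.last_accessed).days
    if idle_days <= 90:  # assumed threshold for frequently used data
        return "primary"
    if idle_days <= 365:  # assumed threshold for occasionally used data
        return "nearline"
    return "offline"


if __name__ == "__main__":
    today = date(2024, 1, 1)
    ledger = Record("ledger", "financial", date(2022, 1, 1), date(2023, 12, 1))
    print(ledger.name, "->", storage_decision(ledger, today))
```

Unknown classes are deliberately routed to "review" rather than deleted, since a deletion decision is only defensible when the data has been classified first.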

Reduce security and compliance risks

Deduplication and classification will also help you stay compliant with regulation around personally identifiable information, such as HIPAA, GDPR or other national rules, as well as other regulations, such as DORA, that set out operational resilience requirements.

What’s more, a better understanding of data means reduced security vulnerabilities. If you do not know where data is or what it is, then you have no idea of its importance or if it’s been compromised. Once you know where your data is, what it is, and how secure it is, you reduce its vulnerability rating. You can also roll out patches across the organisation in priority order, rather than on a basic rollout schedule. Going further, the compression, deduplication, encryption, classification and shorter backup times that come with modern data management enable much faster recovery at scale and improve the future spend profile, as you now know exactly what you are keeping, where and for how long.

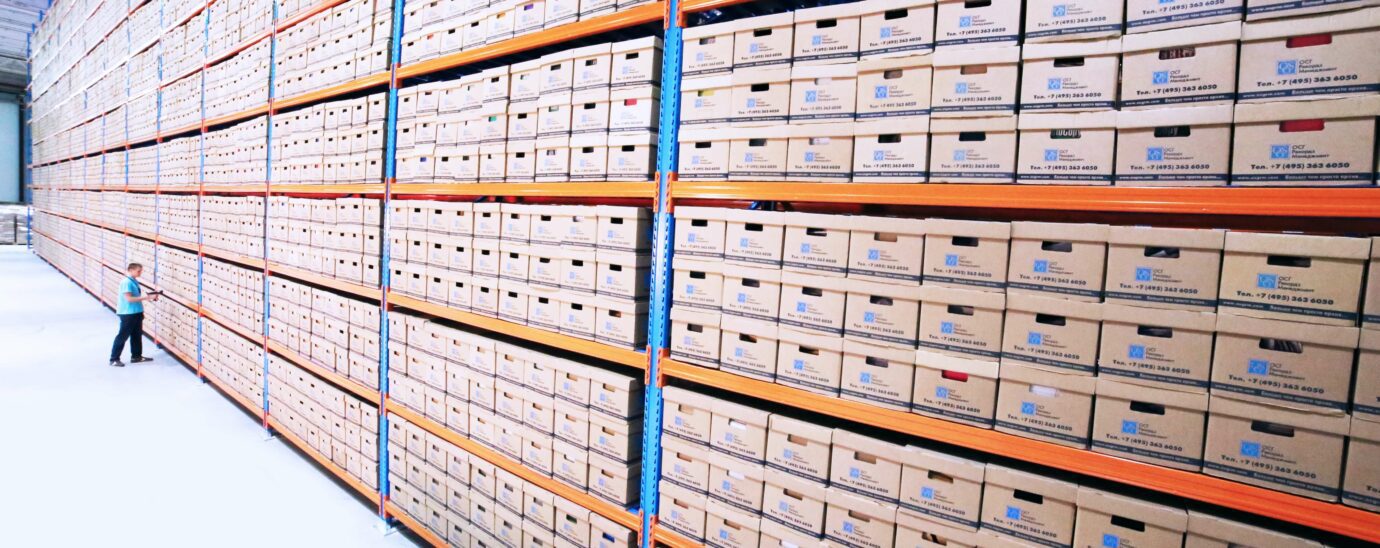

Index old tapes for faster retrieval and reduced compliance risk

Holding legacy data on tape may be important for legal or compliance reasons, but so is the ability to find and restore specific records. Too often, organisations save everything instead of coming up with more sensible archive strategies. Instead, indexing and classifying the records needs to be a priority.

Forrester’s report noted that the organisations it interviewed saved hundreds of thousands of dollars on proprietary hardware, maintenance and off-site storage related to tapes, by storing the tapes’ contents in the cloud instead. Moving the data to the cloud mitigates the risk of loss or damage to the physical tapes themselves. The data can also be indexed, making retrieval easier, and because the vendor provides the conversion to cloud, there are no legacy hardware costs to consider. Classification ensures you only move (and keep) the data that you need to.

Whatever else a business might do with its data, it certainly won’t stop generating more. Implementing measures to manage data better can improve efficiency and reduce costs. At a time when the economic situation is challenging, this could prove a blessing. Maybe it is time for data management strategies to bubble to the top of the to-do list, because getting best practice in place today will streamline how data is collected, stored and used tomorrow.