Could Project Soli revolutionise IoT?

Credit: Google

Does Google’s new IoT innovation get a wave of appreciation or a big thumbs down? We take an in-depth look at Soli and what it means for the technology world.

Whether we're flagging down a cab or giving a thumbs up, gestures are a key communication tool for our species. Our hands can speak for us, so could they also be used to control IoT devices?

We’ve come a long way in 20 years or so. The keyboard-and-mouse combo reigned supreme until touchscreens came along; not even the iconic iPod scroll wheel managed to displace it. Now we have voice assistants such as Alexa and Siri, which can respond to our requests. Devices may one day go beyond our senses altogether and read brain waves, but for now, technology that recognises our movements is a big step for IoT.

Google’s Project Soli could be game-changing. The idea is for devices to recognise hand gestures without you having to touch the screen at all. Take a smartwatch. Instead of touching the watch face – remember when you never had to touch your watch to see the time? – you act out the motion of pressing a button, turning a dial or moving a slider with your thumb and index finger. These virtual gestures mimic actions you already perform in real life, so they’re easy to remember and intuitive to use.


Hand gestures are a logical step forward. They remove the need to physically tap devices, allowing you to take a step back: perhaps we will become a little less dependent on our gadgets as a result. Touchless gestures are also easier to use than a voice assistant in a noisy environment. And unlike a voice assistant, which reads results back to you, gestures still let you see the results in front of you. All things considered, “Gesture IoT” is a sensible bridge between our current smartphone ecosystem and the complex VR worlds beamed from a headset onto your surroundings.

Soli technology could be coming sooner than imagined, too.

How does “Gesture IoT” work?

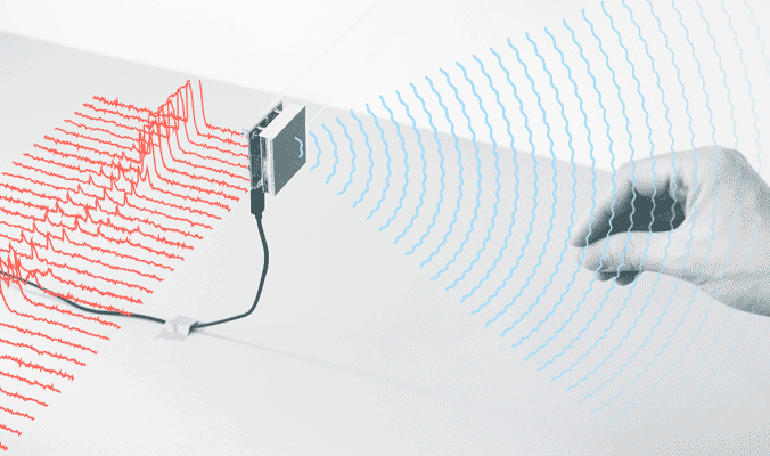

Soli is a motion sensor built on miniature radar. It tracks motion in front of it and converts the signals into commands.

It does this by emitting electromagnetic waves in a broad beam. Whatever the beam hits, such as your hand, reflects part of that energy back to Soli’s antenna. Properties of the reflected signal, including its energy, time delay and frequency shift, reveal the size, shape, distance and speed of your gesture with minimal latency. The Soli chip measures just 8mm x 10mm, yet it reacts to whatever you’re doing in real time.
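The two most basic measurements here follow from textbook radar relations rather than anything Soli-specific. As a minimal sketch, assuming an idealised single reflector: distance comes from the round-trip delay of the echo, and radial speed from its Doppler frequency shift. The function names and the 60 GHz default carrier are illustrative assumptions, not part of the Soli SDK.

```python
# Textbook radar relations for illustration; this is not Soli's own code.
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_delay(round_trip_s: float) -> float:
    """Distance (m) to a reflector from the echo's round-trip time.

    The signal travels out and back, hence the division by two.
    """
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def velocity_from_doppler(doppler_hz: float, carrier_hz: float = 60e9) -> float:
    """Radial speed (m/s) from the Doppler shift of the reflected wave.

    Positive values mean the hand is moving towards the sensor.
    The 60 GHz default carrier is an assumption for illustration.
    """
    return doppler_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


if __name__ == "__main__":
    # A hand about 30 cm away returns its echo after roughly 2 nanoseconds.
    print(f"{range_from_delay(2e-9):.3f} m")          # 0.300 m
    # A 400 Hz shift on a 60 GHz carrier corresponds to about 1 m/s.
    print(f"{velocity_from_doppler(400.0):.3f} m/s")  # 0.999 m/s
```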

Google describes Soli’s spatial resolution as “coarse”: coarser, in fact, than the scale of most fine finger gestures. Rather than resolving a hand point by point, Soli extracts subtle changes in the received signal over time, which is how it can make out complicated finger movements and unusual hand shapes, whether they’re subtle or not. The software processes the signal in successive stages of abstraction, from raw radar data through signal transformations and core machine learning (ML) features to detection, tracking and gesture recognition, so it can work with many different types of gesture.
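Why can a radar with coarse spatial resolution still pick up tiny finger movements? One standard explanation is phase tracking: moving a reflector by a distance d changes the round-trip path by 2d and so shifts the echo’s phase by 4πd/λ, where λ is the wavelength. The sketch below assumes a single clean reflector and a 60 GHz carrier (Soli is widely reported to operate in the 60 GHz band); `unwrap` and `displacement_track` are hypothetical helpers for illustration, not Google’s pipeline.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def unwrap(phases):
    """Remove 2*pi jumps from a sequence of wrapped phase samples (radians)."""
    if not phases:
        return []
    out = [phases[0]]
    for prev, cur in zip(phases, phases[1:]):
        # Wrap each step into [-pi, pi) so a jump across the +/-pi boundary
        # is treated as a small movement rather than a full cycle.
        step = (cur - prev + math.pi) % (2 * math.pi) - math.pi
        out.append(out[-1] + step)
    return out


def displacement_track(phases, carrier_hz=60e9):
    """Displacement (m) of the reflector relative to the first sample.

    A phase change of delta_phi corresponds to wavelength * delta_phi / (4*pi),
    because the round-trip path changes by twice the displacement.
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz  # about 5 mm at 60 GHz
    unwrapped = unwrap(phases)
    return [wavelength * (p - unwrapped[0]) / (4 * math.pi) for p in unwrapped]


if __name__ == "__main__":
    # A quarter-turn of phase per sample: the hand creeps ~0.62 mm per step.
    track = displacement_track([0.0, math.pi / 2, math.pi, -math.pi / 2])
    print([f"{d * 1000:.2f} mm" for d in track])
    # ['0.00 mm', '0.62 mm', '1.25 mm', '1.87 mm']
```

The point of the example is the scale: sub-millimetre motion shows up as a clearly measurable phase change, even though the radar cannot separate two fingertips a few millimetres apart in space.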

The Soli SDK lets developers build on the existing gesture recognition pipeline. Over a series of prototypes, Google shrank the radar system from a much larger unit built from off-the-shelf components and cooling fans into a chip that could be integrated into mobile consumer devices.

Google developed two modulation architectures for Soli: a Frequency Modulated Continuous Wave (FMCW) radar and a Direct-Sequence Spread Spectrum (DSSS) radar. Multiple antennas enable 3D tracking and imaging.
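In an FMCW radar, the usual way to measure distance is to mix the outgoing chirp with its echo: because the transmitted frequency rises linearly, the delayed echo differs from it by a constant “beat” frequency proportional to range. Below is a sketch of the textbook relations. The 7 GHz sweep bandwidth (roughly the width of the unlicensed 57 to 64 GHz band) and the 100 µs chirp duration are assumed values for illustration, not published Soli parameters. With a 7 GHz sweep, the range resolution works out to about 2 cm, which fits the “coarse” spatial resolution Google describes.

```python
# Textbook FMCW relations; parameter values are assumptions, not Soli specs.
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def chirp_slope(bandwidth_hz: float, chirp_s: float) -> float:
    """Rate (Hz/s) at which the transmitted frequency rises during a chirp."""
    return bandwidth_hz / chirp_s


def beat_frequency(range_m: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Beat frequency (Hz) produced by a reflector at range_m."""
    round_trip_s = 2.0 * range_m / SPEED_OF_LIGHT
    return chirp_slope(bandwidth_hz, chirp_s) * round_trip_s


def range_from_beat(beat_hz: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Invert the relation above: R = c * f_beat / (2 * slope)."""
    return SPEED_OF_LIGHT * beat_hz / (2.0 * chirp_slope(bandwidth_hz, chirp_s))


def range_resolution(bandwidth_hz: float) -> float:
    """Smallest separation (m) at which two reflectors can be told apart."""
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    BANDWIDTH = 7e9  # assumed sweep bandwidth (Hz)
    CHIRP = 100e-6   # assumed chirp duration (s)
    fb = beat_frequency(0.30, BANDWIDTH, CHIRP)
    print(f"beat: {fb / 1e3:.1f} kHz")                               # 140.1 kHz
    print(f"range: {range_from_beat(fb, BANDWIDTH, CHIRP):.2f} m")    # 0.30 m
    print(f"resolution: {range_resolution(BANDWIDTH) * 100:.1f} cm")  # 2.1 cm
```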

What IoT devices will Soli be used in?

The Google Pixel 4 marks a change in the way that smartphones are hyped.

Getting ahead of the inevitable concept renders and fan-made designs that flood the internet each summer, Google chose to leak its own handset. Fitting, perhaps, in a week in which alt-rock legends Radiohead released their own demos after hackers held them to ransom.

Google’s self-leaked teaser did nothing to quell rumours about Soli, however. There are still whispers that the new handset will come with motion gesture technology, which would really shake up the market just as 5G is about to sweep through the industry.

Smartphones are just the start of what will become an extraordinary aspect of IoT.

It’s already illegal in many countries to use a handheld mobile phone while driving, so it’s not much of a stretch to imagine cars soon being fitted with “Gesture IoT”, whether they’re autonomous vehicles or not. Wearable tech suddenly becomes a lot easier to control if all you have to do is wave your hand. That goes not just for watches but for headphones too, whose volume you could adjust without touching them. Google has suggested that Soli could be used in speakers pretty soon. How long before we see television and gaming that incorporate “Gesture IoT”, as in the Black Mirror episode “Fifteen Million Merits”?

Then there’s the shape-recognition aspect of the technology. This could be used in cashierless shops such as Amazon Go to detect whether an item has been picked up or put back. The touchless element is also particularly useful in hospitals, restaurants and other places where contamination is a worry.

When you start to consider the possibilities of Soli, the ways this non-invasive technology could be harnessed seem almost limitless.